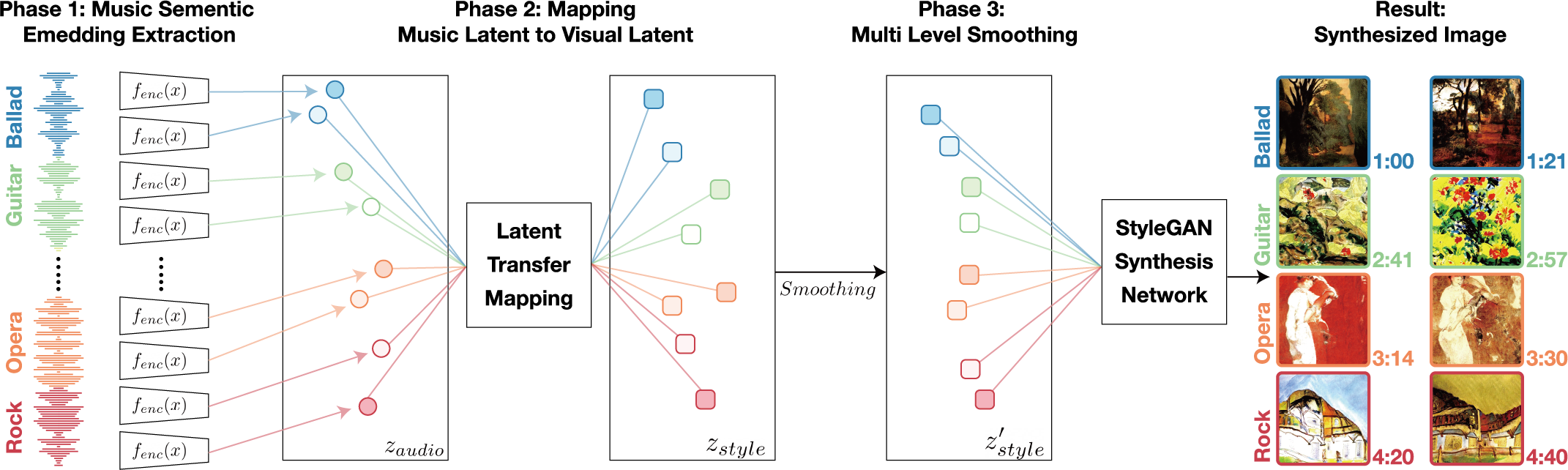

Our goal is to use a neural network to generate a visually appealing video that responds to music, so that each frame of the video reflects the musical characteristics of the corresponding audio clip. To achieve this, we propose TräumerAI, a neural music visualizer that directly maps deep music embeddings to the style embeddings of StyleGAN.

Figure 1. System diagram and images generated from Queen's "Bohemian Rhapsody"

Generated Videos

Below are videos generated by TräumerAI. The mapping between music and images is based on 100 manually labeled music-image pairs. The images are generated by StyleGAN2 pre-trained on WikiArt (https://github.com/pbaylies/stylegan2).

The labeled dataset does not include any music by the artists featured in this demo.
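To make the idea of "mapping music embeddings to style embeddings from 100 labeled pairs" concrete, here is a minimal sketch that assumes the mapping is a single linear layer fitted by ridge regression. The linear form, the function names `fit_music_to_style` and `map_to_style`, and the regularization strength `l2` are all hypothetical illustrations, not the TräumerAI implementation; the shapes of the music and style embeddings are left generic.

```python
import numpy as np

def fit_music_to_style(music_emb, style_emb, l2=1e-2):
    """Hypothetical linear mapping: fit W, b so that style_emb ~ music_emb @ W + b.

    music_emb: (n_pairs, d_music) array of music embeddings
    style_emb: (n_pairs, d_style) array of labeled StyleGAN style vectors
    Uses ridge regression; the bias term is not regularized.
    """
    n, d = music_emb.shape
    X = np.hstack([music_emb, np.ones((n, 1))])  # append a bias column
    reg = l2 * np.eye(d + 1)
    reg[-1, -1] = 0.0  # leave the bias unpenalized
    # Solve the regularized normal equations (X^T X + reg) Wb = X^T Y
    Wb = np.linalg.solve(X.T @ X + reg, X.T @ style_emb)
    return Wb[:-1], Wb[-1]

def map_to_style(music_emb, W, b):
    """Map per-frame music embeddings to style vectors (one row per frame)."""
    return music_emb @ W + b
```

With only about 100 labeled pairs, a small ridge penalty keeps the solve well conditioned even when the music embedding has more dimensions than there are pairs; the resulting per-frame style vectors would then be fed to the pre-trained generator.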

With modified smoothing and higher fps

Luis Fonsi - Despacito

John Williams - Star Wars Main Title

Wagner - Ride of the Valkyries

Queen - Bohemian Rhapsody

Queen - Bohemian Rhapsody (PhilharmoniX version)

Schumann - Träumerei

Old Examples

Schumann - Träumerei

Queen - Bohemian Rhapsody

BTS - Dynamite

Avicii - Waiting for Love

Adele - Hello